PCIe Intel NTB Networking

Some Intel Xeon server CPUs include a PCI Express Non-Transparent Bridge (NTB) function. Combined with Crystal Beach DMA and Intel VT-d technology, it can be used to build a very low-latency PCIe clustering solution. PCIe re-driver cards are used to reliably connect the servers using standard PCIe cables.

Dolphin has ported its eXpressWare software stack to natively support Intel NTB-enabled CPUs. The main benefits are reduced latency and lower hardware cost.

Performance

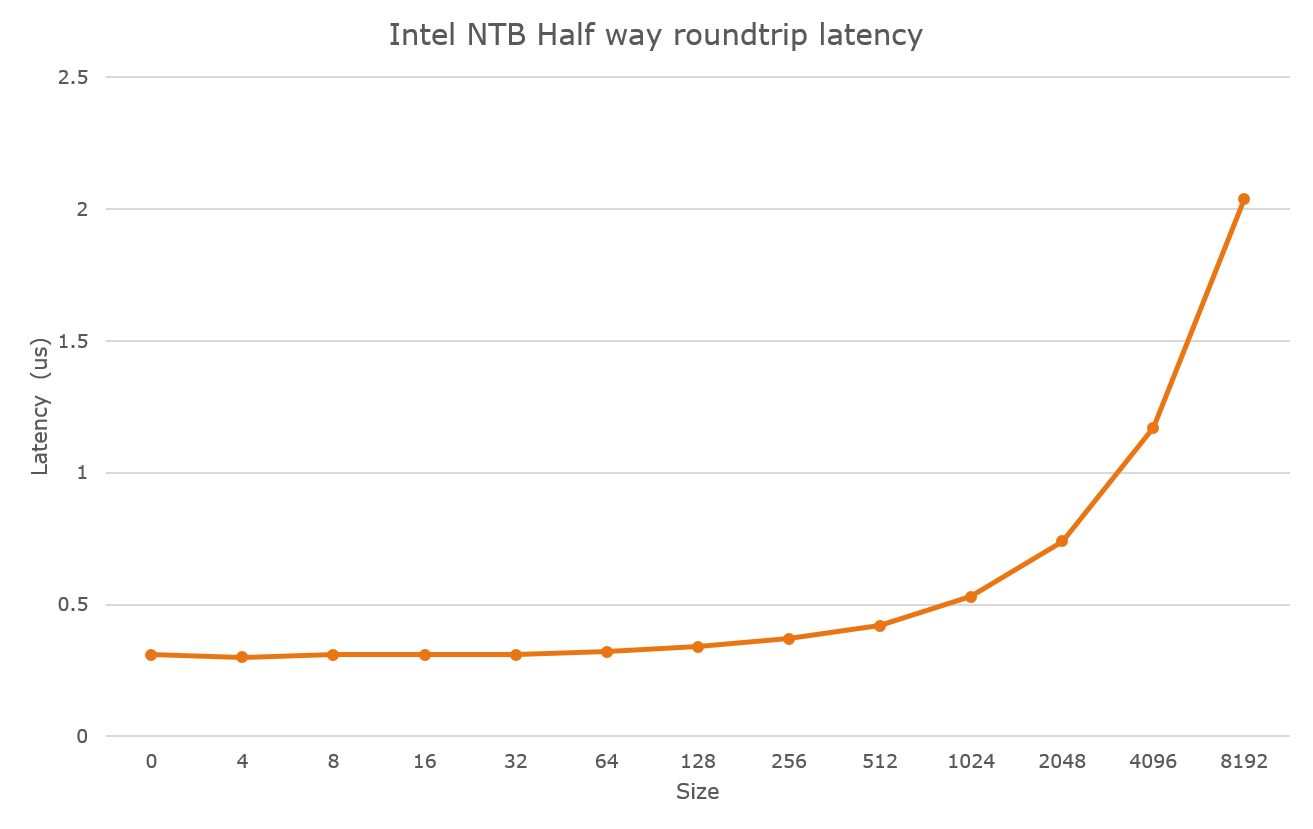

Dolphin's standard SISCI API PIO latency benchmark, scipp, implements a full two-way, memory-to-memory ping-pong transfer between two applications running on the servers. The full round-trip latency for a 4-byte message is below 600 nanoseconds, which means that the one-way latency is below 300 nanoseconds.

Figure 1: scipp latency using Intel NTB.
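
As a rough illustration of how a ping-pong benchmark of this kind arrives at its numbers, the sketch below bounces a 4-byte message between two threads through local memory, averages the round-trip time, and halves it to estimate the one-way latency. It is not scipp and does not use the SISCI API or any NTB hardware; the function names `ping_pong_round_trip_ns` and `one_way_latency_ns` are hypothetical, and the timings it reports reflect Python thread scheduling rather than PCIe performance.

```python
import threading
import time


def one_way_latency_ns(round_trip_ns):
    """Estimate one-way latency as half of the measured round trip."""
    return round_trip_ns / 2


def ping_pong_round_trip_ns(iterations=200):
    """Average round-trip time (ns) of a 4-byte ping-pong between two threads.

    Assumption: two local buffers stand in for the memory that each side
    would poll; a real benchmark would poll memory written by the remote peer.
    """
    ping = bytearray(4)  # written by the initiator, polled by the responder
    pong = bytearray(4)  # written by the responder, polled by the initiator

    def responder():
        for seq in range(1, iterations + 1):
            expected = seq.to_bytes(4, "little")
            while bytes(ping) != expected:  # wait for the next ping
                time.sleep(0)               # yield so the peer can run
            pong[:] = expected              # echo the message back

    worker = threading.Thread(target=responder)
    worker.start()

    start = time.perf_counter_ns()
    for seq in range(1, iterations + 1):
        message = seq.to_bytes(4, "little")
        ping[:] = message                   # send the ping
        while bytes(pong) != message:       # wait for the matching pong
            time.sleep(0)
    elapsed = time.perf_counter_ns() - start

    worker.join()
    return elapsed / iterations


if __name__ == "__main__":
    rtt = ping_pong_round_trip_ns()
    print(f"round trip: {rtt:.0f} ns, one way: {one_way_latency_ns(rtt):.0f} ns")
```

Applied to the bound quoted above, `one_way_latency_ns(600)` returns `300.0`, matching the stated one-way latency of below 300 nanoseconds.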

Crystal Beach DMA transfers are also supported and are well integrated with the eXpressWare software stack. DMA can be used to transfer larger blocks of data between local and remote memory and I/O addresses.

Hardware configuration

To connect two servers, you need a transparent PCIe re-driver card that supports this configuration. More systems can be interconnected using Dolphin's IXS600 Gen3 PCIe switch. Please contact Dolphin for more details.

Availability

The Linux Intel NTB eXpressWare software is currently available to beta testers. Please e-mail pci-support@dolphinics.com if you would like to test the new capabilities. Note that you need an Intel NTB-enabled server to use this software; consult your system vendor or the BIOS user's guide to confirm that the server supports Intel NTB.