Preface

Commodity processors, memories and IO devices interconnected by a fast remote memory access network are an attractive way to build large multiprocessor systems, clusters and embedded control systems economically.

The SISCI API Project (Software Infrastructure for Shared-Memory Cluster Interconnects, "SISCI") aims to define a common Application Programming Interface ("API") to serve as a basis for porting major applications to heterogeneous multi-vendor shared memory platforms.

The SISCI software and underlying drivers simplify the process of building remote shared memory based applications. The built-in resource management enables multiple concurrent SISCI programs to coexist and operate independently of each other.

PCI Express NTB networks created using PCI Express adapter cable cards or PCI Express enabled backplanes provide a non-coherent distributed shared memory architecture which is ideal for a very efficient implementation of the SISCI API.

The functional specification of the API presented in this document is defined in ANSI C, but can also be used from C++. With a lightweight wrapper, it can also be used by applications written in C#, Java and Python.

A detailed introduction to the SISCI API can be found at:

www.dolphinics.com/products/embedded-sisci-developers-kit.html

Introduction

This introductory chapter defines some terms that are used throughout the document and briefly describes the cluster architecture that represents the main objective of this functional specification.

Dolphin's implementation of the SISCI API comes with an extensive set of example and demo programs that can be used as a basis for a new program or to fully understand the details of SISCI programming. Newcomers to the SISCI API are advised to study these programs carefully before making important architecture decisions. The demo and example programs are included with the software distribution.

The SISCI API is available in user space. Dolphin offers the same functionality in kernel space, defined by the GENIF Kernel interface. The definition of the GENIF interface can be found in the source file DIS/src/IRM_GX/drv/src/genif.h. The SISCI driver itself, found in DIS/src/SISCI/src, is a good example of how to use the GENIF Kernel interface. Please note that on VxWorks the SISCI API is available for kernel applications only; RTPs are not supported.

The SISCI API is available and fully supported with Dolphin's PCI Express NTB enabled hardware products. The software is also licensed to several OEMs providing their own PCI Express enabled products.

Dolphin offers extensive support and assistance for migrating your application to the SISCI API. Please contact your sales representative for more information or email sisci-support@dolphinics.com.

Basic Concepts

Processor

- A collection of one or more CPUs sharing memory using a local bus.

Host

- A processor with one or more PCI Express enabled switches or pluggable adapters.

Adapter number

- A unique number identifying one or more local adapters or PCI switches.

Fabric

- The PCI Express network interconnecting the hosts. A host may be connected by several parallel fabrics.

NodeId

- A fabric-unique number identifying a host on the network.

SegmentId

- A host-unique number identifying a memory segment within a host. A segment can be uniquely identified by its SegmentId and local NodeId.

PIO

- Programmed IO. A load or store operation performed by a CPU towards a mapped address.

DMA

- A system or network resource that can be used as an alternative to PIO to move data between segments. The SISCI API provides a set of functions to control the PCIe or system DMA engines.

Global DMA

- A SISCI DMA resource that can address any remote segment without being mapped into the local address space.

System DMA

- A SISCI DMA resource provided by the CPU system, e.g. Intel Crystal Beach DMA.

Local segment

- A memory segment located on the same processor where the application runs and accessed using the host memory interface. Identified by its SegmentId.

Remote segment

- A memory segment accessed via the fabric.

Connected segment

- A remote segment for which the IO base address and the size are known.

Mapped segment

- A local or remote (connected) segment mapped in the addressable space of a program.

Reflective Memory

- Hardware based broadcast / multicast functionality that simultaneously forwards data to all hosts in the fabric. Not available with all configurations.

IO Device

- A PCI or PCI Express card / device, e.g. a GPU, NVMe or FPGA attached to a host system or expansion box attached to the network.

Peer to Peer communication

- IO Devices communicating directly - device to device - without the use of the memory system. The devices can be local to a host or separated by the interconnect fabric.

Cluster Architecture

The basic elements of a cluster are a collection of interconnected hosts. A host may be a single processor or an SMP containing several CPUs. Adapters connect a host to a fabric. It is possible for a host to be connected to several fabrics. This allows for the construction of complex topologies (e.g. a two-dimensional mesh). It may also be used to add redundancy and/or improve the bandwidth. Such architectures are usually obtained by using several adapters on one host.

Adapters often contain sub units that implement specific SISCI API functions such as transparent remote memory access, DMA, message mailboxes or interrupts. Most sub units have CSR registers that may be accessed locally via the host adapter interface or remotely over the PCI Express fabric.

SISCI API Data types

This Application Programming Interface covers different aspects of shared memory technology and how a user can access it. The API items can be grouped into categories. The specification of functions and data types, presented in Chapter 3, is therefore preceded by a short introduction to these categories. For easier consultation, a list of the relevant API items is provided for each category.

Data formats

Parameters and return values of the API functions, other than the data types introduced in the following sections, are expressed in the machine native data types. The only assumption is that ints are at least 32 bits and shorts are at least 16 bits. If, in the future, a specific size or endianness is needed, the Shared-Data Formats shall be used.
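The width assumption above can be verified when an application is built. The following is a minimal sketch in ANSI C, not part of the SISCI API; the typedef and function names are hypothetical and chosen only for this illustration.

```c
#include <limits.h>

/* Compile-time checks: an array of negative size is illegal, so the
   build fails if the platform violates either minimum width. */
typedef char example_int_has_32_bits[(sizeof(int) * CHAR_BIT >= 32) ? 1 : -1];
typedef char example_short_has_16_bits[(sizeof(short) * CHAR_BIT >= 16) ? 1 : -1];

/* Run-time equivalent: returns 1 when both assumptions hold, 0 otherwise. */
int example_native_types_meet_minimum(void)
{
    return (sizeof(int) * CHAR_BIT >= 32) && (sizeof(short) * CHAR_BIT >= 16);
}
```

Placing such a check in a common header lets a port to a new platform fail early, at build time, rather than at run time.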

Descriptors

Working with remote shared memories, DMA transfers and remote interrupts, the major communication features that this API offers, requires the use of logical entities such as devices, memory segments and DMA queues. Each of these entities is characterized by a set of properties that should be managed as a single object in order to avoid inconsistencies. To hide the details of the internal representation and management of such properties from an API user, a number of descriptors have been defined and made opaque: their contents can be referenced with a handle and can be modified only through the functions provided by the API.

The descriptors and their meaning are the following:

sci_desc

It represents a SISCI virtual device, that is, a communication channel with the driver. Many virtual devices can be opened by the same application. It is initialized by calling the function SCIOpen().

sci_local_segment

It represents a local memory segment and it is initialized when the segment is allocated by calling the function SCICreateSegment().

sci_remote_segment

It represents a segment residing on a remote node. It is initialized by calling the function SCIConnectSegment().

sci_map

It represents a memory segment mapped in the process address space. It is initialized by calling either the SCIMapRemoteSegment() or SCIMapLocalSegment() function.

sci_sequence

It represents a sequence of operations involving communication with remote nodes. It is used to check if errors have occurred during a data transfer. The descriptor is initialized when the sequence is created by calling the function SCICreateMapSequence().

sci_dma_queue

It represents a chain of specifications of data transfers to be performed using the DMA engine available on the adapter. The descriptor is initialized when the chain is created by calling the function SCICreateDMAQueue().

sci_local_interrupt

It represents an instance of an interrupt that an application has made available to remote nodes. It is initialized when the interrupt is created by calling the function SCICreateInterrupt().

sci_remote_interrupt

It represents an interrupt that can be triggered on a remote node. It is initialized by calling the function SCIConnectInterrupt().

sci_local_data_interrupt

It represents an instance of a data interrupt that an application has made available to remote nodes. It is initialized when the interrupt is created by calling the function SCICreateDataInterrupt().

sci_remote_data_interrupt

It represents a data interrupt that can be triggered on a remote node. It is initialized by calling the function SCIConnectDataInterrupt().

Each of the above descriptors is an opaque data type and can be referenced only via a handle. The name of the handle type is given by the name of the descriptor type with a trailing _t.

No automatic cleanup of the resources represented by the above descriptors is performed; rather, it should be provided by the API client*. Resources cannot be released (and the corresponding descriptors deallocated) until all the dependent resources have been released. The dependencies between resource classes can be derived from the function specifications.
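The opaque-handle convention and the release-order rule can be illustrated with a small, self-contained sketch. All names below (example_device_t, example_open and so on) are hypothetical stand-ins invented for this illustration; they are not SISCI types or functions, and the reference count is only one possible way to model the dependency between resources.

```c
#include <stdlib.h>

/* Handle types: the descriptor name with a trailing _t. Client code
   would only ever see these two pointer typedefs. */
typedef struct example_device  *example_device_t;
typedef struct example_segment *example_segment_t;

/* In a real library these definitions would be hidden in the
   implementation file; they appear here to keep the sketch in one file. */
struct example_device  { int dependent_segments; };
struct example_segment { example_device_t owner; };

example_device_t example_open(void)
{
    example_device_t dev = malloc(sizeof *dev);
    if (dev != NULL) dev->dependent_segments = 0;
    return dev;
}

example_segment_t example_create_segment(example_device_t dev)
{
    example_segment_t seg = malloc(sizeof *seg);
    if (seg != NULL) { seg->owner = dev; dev->dependent_segments++; }
    return seg;
}

void example_remove_segment(example_segment_t seg)
{
    seg->owner->dependent_segments--;
    free(seg);
}

/* Returns 0 on success, or -1 (leaving the device open) while
   dependent segments still exist. */
int example_close(example_device_t dev)
{
    if (dev->dependent_segments > 0) return -1;
    free(dev);
    return 0;
}
```

In this model a client has to remove every segment before closing the device it was created on, which mirrors the rule that dependent resources are released first.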

Flags

Nearly all functions included in this API accept a flags parameter as input. It is used to obtain from a function invocation an effect that differs slightly from its default semantics (e.g. choosing between a blocking and a non-blocking version of an operation).

Each SISCI API function specification is followed by a list of accepted flags. Only the flags that change the default behavior are defined. Several flags can be OR'ed together to specify a combined effect. The flags parameter, represented as an unsigned int, must therefore be treated as a bit mask.

Some of the functions do not currently accept any flag. The parameter is nonetheless left in the specification, because it could become useful for future extensions, and the implementation shall check that it is 0.

A flag value starts with the prefix SCI_FLAG_.
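As an illustration of the bit-mask convention, the sketch below combines two flags and validates a flags argument against the set a function accepts. The flag names and values are hypothetical placeholders; real flags use the SCI_FLAG_ prefix and take their values from the SISCI header.

```c
/* Hypothetical flags for illustration only, one bit each. */
#define EXAMPLE_FLAG_NONBLOCKING 0x1u
#define EXAMPLE_FLAG_READONLY    0x2u

/* The set of flags accepted by an imaginary function. */
#define EXAMPLE_ACCEPTED_FLAGS (EXAMPLE_FLAG_NONBLOCKING | EXAMPLE_FLAG_READONLY)

/* Returns 1 if every bit set in flags is also set in accepted,
   0 otherwise. A function that accepts no flags passes accepted = 0,
   so only flags == 0 is legal. */
int example_flags_are_legal(unsigned int flags, unsigned int accepted)
{
    return (flags & ~accepted) == 0u;
}
```

A caller would request a combined effect with an expression such as EXAMPLE_FLAG_NONBLOCKING | EXAMPLE_FLAG_READONLY, and pass 0 to keep the default behavior.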

Errors

Most of the API functions return an error code as an output parameter to indicate if the execution succeeded or failed. The error codes are collected in an enumeration type called sci_error_t. Each value starts with the prefix SCI_ERR_. The code denoting success is SCI_ERR_OK and an application should check that each function call returns this value.
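The checking discipline described above can be sketched as follows. The enumeration, the function example_operation and the helper example_check are hypothetical stand-ins written for this illustration; the real sci_error_t values and function signatures are those given in the function specifications.

```c
#include <stdio.h>

/* Stand-in for the real error enumeration; the numeric values are
   placeholders, not the values used by the SISCI header. */
typedef enum {
    EXAMPLE_ERR_OK = 0,
    EXAMPLE_ERR_ILLEGAL_FLAG
} example_error_t;

/* Hypothetical API-style function that reports its status through an
   output parameter. It accepts no flags, so anything but 0 is illegal. */
void example_operation(unsigned int flags, example_error_t *error)
{
    *error = (flags == 0u) ? EXAMPLE_ERR_OK : EXAMPLE_ERR_ILLEGAL_FLAG;
}

/* Returns 1 on success. On failure, logs the call name and the error
   code to stderr and returns 0. */
int example_check(const char *call, example_error_t error)
{
    if (error != EXAMPLE_ERR_OK) {
        fprintf(stderr, "%s failed with error code %d\n", call, (int)error);
        return 0;
    }
    return 1;
}
```

Calling a helper of this kind after every API call keeps the check uniform and makes it harder to ignore a failure by accident.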

In Chapter 3 each function specification is followed by a list of possible errors that are typical for that function. There are, however, common or very generic errors that are not repeated every time, unless they have a particular meaning for that function:

SCI_ERR_NOT_IMPLEMENTED

- the function is not implemented

SCI_ERR_ILLEGAL_FLAG

- the flags value passed to the function contains an illegal component. The check is done even if the function does not accept any flag (i.e. it accepts only the default value 0)

SCI_ERR_FLAG_NOT_IMPLEMENTED

- the flags value passed to the function is legal but the operation corresponding to one of its components is not implemented

SCI_ERR_ILLEGAL_PARAMETER

- one of the parameters passed to the function is illegal

SCI_ERR_NOSPC

- the function is unable to allocate some needed operating system resources

SCI_ERR_API_NOSPC

- the function is unable to allocate some needed API resources

SCI_ERR_HW_NOSPC

- the function is unable to allocate some hardware resources

SCI_ERR_SYSTEM

- the function has encountered a system error; errno should be checked

Each function requiring a local adapter number can generate the following errors:

SCI_ERR_ILLEGAL_ADAPTERNO

- the adapter number is out of the legal range

SCI_ERR_NO_SUCH_ADAPTERNO

- the adapter number is legal but no such adapter exists

Each function requiring a node identifier can generate the following errors:

SCI_ERR_NO_SUCH_NODEID

- a node with the specified identifier does not exist

SCI_ERR_ILLEGAL_NODEID

- the node identifier is illegal

Other data types

Besides the data types specified in the previous sections, the following are also used:

- sci_address_t

- sci_segment_cb_reason_t

- sci_dma_queue_state_t

- sci_sequence_status_t

General functions

To use the network correctly, an application is required to execute some operations such as opening or closing a communication channel with the SISCI driver. To use the network effectively, an application may also need some information about the local or a remote node.

Remote Shared Memory

The PCI Express technology implements a remote memory access approach that can be used to transfer data between systems and IO devices. An application can map into its own address space a memory segment actually residing on another node; read and write operations from or to this memory segment are then automatically and transparently converted by the hardware into remote operations. This API provides full support for creating and exporting local memory segments, for connecting to and mapping remote memory segments, and for checking whether errors have occurred during a data transfer.
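Once a segment is mapped, accessing it is ordinary pointer use. The sketch below shows the idea with two hypothetical helper functions that are not part of the SISCI API. In a real program the mapped pointer would be the address obtained by mapping a connected remote segment; in this sketch any ordinary array can stand in for it, so the code runs without SISCI hardware.

```c
#include <stddef.h>

/* Copies count words to a mapped address using plain CPU stores (PIO).
   The volatile qualifier keeps the compiler from eliding or merging
   the stores. */
void example_pio_write(volatile unsigned int *mapped,
                       const unsigned int *src, size_t count)
{
    size_t i;
    for (i = 0; i < count; i++) {
        mapped[i] = src[i];
    }
}

/* Reads one word from a mapped address using a plain CPU load. */
unsigned int example_pio_read(const volatile unsigned int *mapped, size_t index)
{
    return mapped[index];
}
```

The sketch deliberately leaves out error checking of the transfer, which the API handles through sequences (see the sci_sequence descriptor).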

The functions included in this category concern the following aspects:

Memory management:

Connection management:

Segment events:

Shared memory operations:

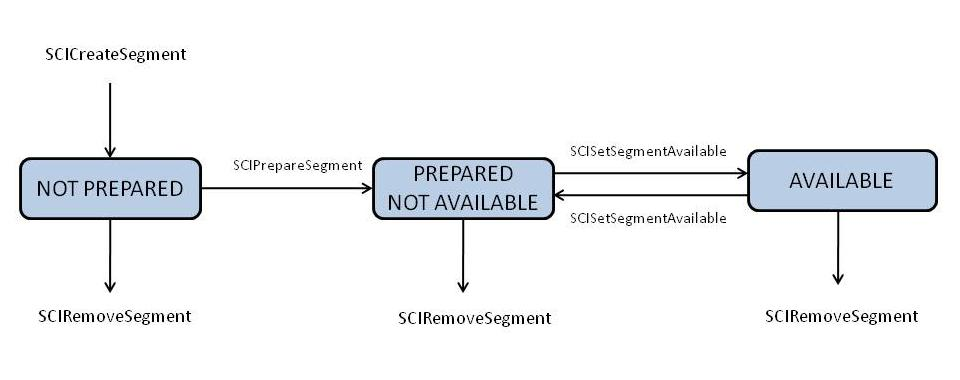

Memory and connection management functions affect the state of a local segment, whose state diagram is shown in the figure below.

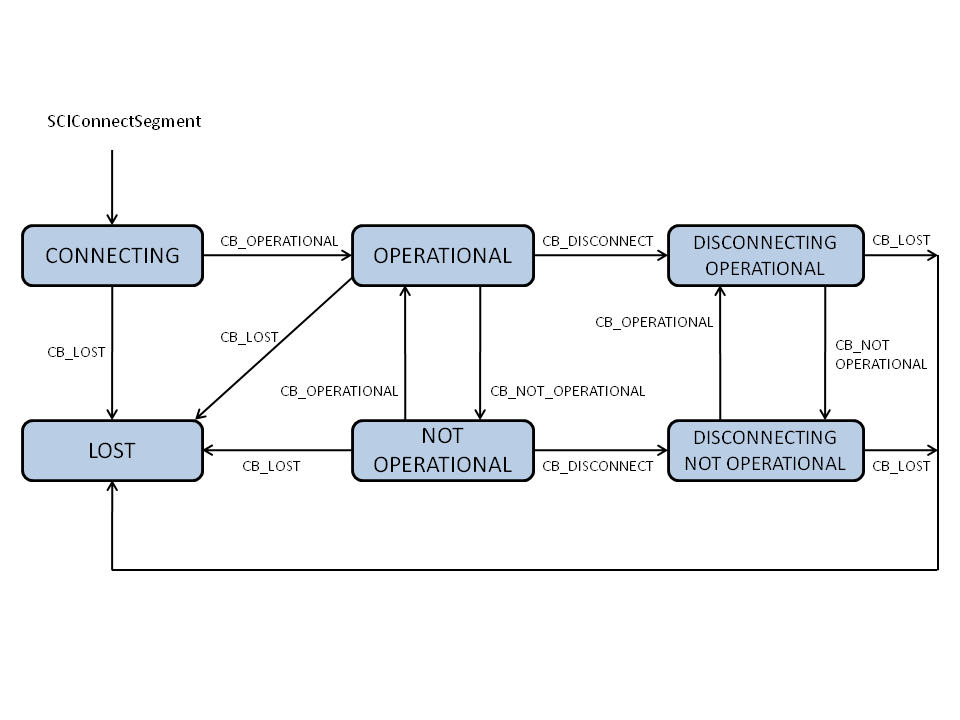

The state of a remote segment, shown in the accompanying figure, depends on what happens on the network or on the node where the segment physically resides. The transitions between the remote segment states are marked with callback reasons (sci_segment_cb_reason_t). SCIDisconnectSegment() can be called from each state to exit the state diagram.

Direct Memory Access (DMA)

PIO (Programmed IO) has the lowest overhead and lowest latency when accessing remote memory. The drawback of PIO data transfers is that the CPU is busy reading or writing data from or to remote memory. An alternative is to use a DMA engine if one is available. The application specifies a queue of data transfers and passes it to the DMA engine. The CPU is then free either to wait for the completion of the transfer or to do something else. In the latter case it is possible to specify a callback function that is invoked when the transfer has finished. DMA has a high start-up cost compared to PIO and is normally only recommended for larger transfers.

DMA is an optional feature implemented with some PCIe chipsets only. Some systems also have a "System DMA" engine. The SISCI API DMA functionality is available on all platforms supporting PCIe DMA or System DMA that is integrated and supported with the SISCI driver stack. SISCI DMA transfer functions will fail if there is no supported DMA engine available.
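The trade-off between PIO and DMA can be expressed as a simple size-based policy. The sketch below is an assumption made for illustration, not a rule defined by the SISCI API: the type and function names are hypothetical, and a suitable threshold depends on the hardware and should be found by measurement.

```c
#include <stddef.h>

typedef enum { EXAMPLE_USE_PIO, EXAMPLE_USE_DMA } example_method_t;

/* Chooses a transfer method for a transfer of the given size.
   Falls back to PIO when no DMA engine is available, and uses DMA
   only for transfers of at least dma_threshold bytes, where its
   start-up cost is amortized. */
example_method_t example_choose_method(size_t bytes, size_t dma_threshold,
                                       int dma_available)
{
    if (!dma_available) {
        return EXAMPLE_USE_PIO;
    }
    return (bytes >= dma_threshold) ? EXAMPLE_USE_DMA : EXAMPLE_USE_PIO;
}
```

An application could call such a function once per transfer, passing a threshold determined by benchmarking on the target system.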

Interrupts

Triggering an interrupt on a remote node should be considered a fast way to notify an application running remotely that something has happened. An interrupt is identified by a unique number and this is practically the only information an application gets when it is interrupted, either synchronously or asynchronously. The SISCI API contains two types of interrupt, with and without data.

Interrupts with no data:

Interrupts with data:

Device to Device transfers

The SISCI API supports setting up general IO devices to communicate directly, device to device (peer to peer communication). The alternative model, using main memory as an intermediate buffer, has significant overhead. The communicating devices can be placed in the same host or in different hosts interconnected by the shared memory fabric.

Peer to peer functionality is an optional PCI Express feature. Please ensure your computer supports peer to peer transfers (ask your system vendor).

Please consult the rpcia.c example program found in the software distribution for more information on how to set up Device to Device transfers or access remote physical memory devices.

Reflective Memory / Multicast

The SISCI API supports setting up Reflective Memory / Multicast transfers.

The Dolphin PCI Express IX, MX and PX product families support multicast operations as defined by the PCI Express Base Specification 2.1. Dolphin has integrated support for this functionality into the SISCI API specification to make it easily available to application programmers. The multicast functionality is also available with some OEM hardware configurations. (Please check with your hardware vendor if multicast is available for your configuration.) SISCI functions will typically return an error if the multicast functionality is not available.

There are no special functions to set up the Reflective Memory. Programmers just need to use the flag SCI_FLAG_BROADCAST when SCICreateSegment() and SCIConnectSegment() are called.

For reflective memory operations, the NodeId parameter to SCIConnectSegment() should be set to DIS_BROADCAST_NODEID_GROUP_ALL.

PCI Express based multicast uses the server main memory or a PCI Express device memory (e.g. an FPGA or GPU buffer) as the multicast memory.

PCI Express multicast transfers can be generated by the CPU, a DMA controller or any type of PCIe device that can generate PCIe TLPs.

Please consult the reflective memory white papers available at https://www.dolphinics.com/support/whitepapers.html and example programs found in the software distribution for more information on how to use the reflective memory functionality.

Support Functions

The SISCI API provides support / helper functions that may be useful.

SmartIO

NOTE: The SmartIO SISCI API extension is currently not finalized and may change in future releases without warning!

The SmartIO functionality was introduced with eXpressWare 5.5.0 and is currently only available with Linux, but may be ported to other operating systems in the future.

SmartIO is currently supported with all Dolphin's PXH and MXH NTB enabled PCIe cards and licensed to selected OEM solutions based on Broadcom or Microsemi PCIe chipsets.

Please contact Dolphin if you are interested in the SmartIO functionality.

The SISCI API SmartIO extension exposes features from the SmartIO module, if present. These functions are appropriate to use in order to gain low level access to devices. They can be used to write user space drivers using SISCI. The functions will work on devices anywhere in the cluster and multiple nodes can access the same device at the same time. In general, the SmartIO API will reuse 'normal' SISCI features where possible. For instance, device BAR registers are exposed as segments. The main SmartIO module must be configured in order for this API to function.

SmartIO devices are represented in SISCI as sci_device_t handles. This handle is initialized by borrowing the device, and released when the device is returned.

Once a device has been borrowed, its BARs can be accessed through a remote segment. To connect to these segments, the following function must be used. After connecting, the segment is to be treated as any other remote segment.

The device may also perform DMA to both local and remote segments. This must be set up by functions that map the given segment and provide the caller with the address to be used by the device to reach the given segment.

Device interrupts can be attached to interrupts with associated handlers.

SmartIO helper functions that may be helpful

Special Functions

Some special functions exist. They are available for all platforms and operating systems, but may only be needed for special environments or purposes.

The following function can be used to synchronize the CPU memory cache on systems without coherent IO (Nvidia Jetson Tegra TK1, TX1, TX2).